NEAR AI recently launched “private inference” and a “private chat” experience that promise a new level of data protection: your messages are processed inside cryptographically isolated environments called trusted execution environments (TEEs), meaning that neither the cloud operator nor NEAR AI’s own staff should be able to see your data.

It’s a major step forward for user-controlled AI and something to be celebrated, but we need to be clear about the limits of that privacy.

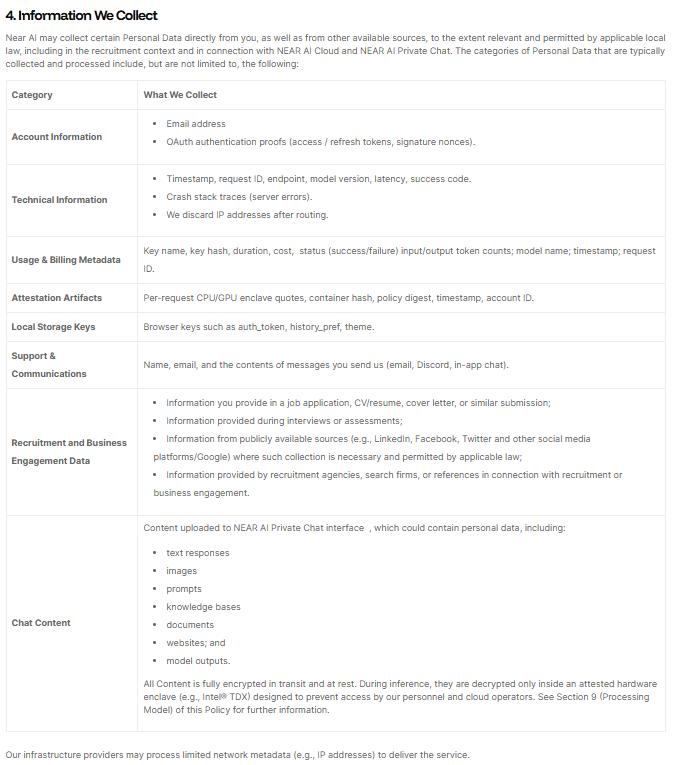

-excerpt from NEAR AI privacy policy (accessed 4 Dec 2025)

After I recommended NEAR AI's private chat, several people asked how the service is actually private given that, at the time of launch, they had to sign in with a GitHub or Google identity. A couple even pointed me to the privacy policy, which details the information being collected.

Since then, an option to sign in with NEAR has been added, which eliminates the risk associated with GitHub/Google login (see below). I also appreciate that NEAR AI recently added context to the chat content block of the privacy policy, clearly indicating that although chat content is collected (which is necessary to give you a history of your threads), it's encrypted and not accessible, and pointing to section 9 for more detail on processing.

Those are all valid concerns for a product claiming to be "private", so they need to be addressed. At the very least, people should be able to clearly understand the risk they assume by using the service for whatever purpose they have in mind.

I am not writing this post to be alarmist or to poke holes in NEAR AI's private inference and cloud offerings. I'm a huge fan. But, as with any emerging technology, the label “private” can be misunderstood, so this post identifies the risks that exist and explains:

- What NEAR AI’s private chat actually protects

- Where the real vulnerabilities still exist

- When your chats could become exposed

- What “private” should mean for you as a user

The goal is simple: help you make informed decisions about how you use private AI tools.

Note: at time of writing, I notified the NEAR AI team of this post, asking them to comment on/clarify anything I've said that they feel is mitigated or addressed. I've made some changes based on initial feedback to date and notice recent clarifications added to their privacy policy which are much appreciated. I'm also hoping they intend to make some more updates to clarify a couple of lingering issues. I will continue to update with any additional feedback provided (then remove this notice). I'm more than happy to be told/proven wrong and that something in here is actually not an issue or a valid concern.

1. What NEAR AI’s “Private Chat” Actually Protects

NEAR AI runs your prompts and model responses inside secure hardware environments (Intel TDX and NVIDIA Confidential GPUs) that create a sealed box around the AI model.

Inside that box:

- Model weights are encrypted

- Your prompts are encrypted

- Outputs are encrypted

- Even the cloud provider hosting the hardware can’t see inside

- A cryptographic “attestation” proves the model and environment are genuine
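To make the attestation idea more concrete, here is a minimal Python sketch of the kind of check a client could run. It is not NEAR AI's actual verification flow: `AttestationReport`, `EXPECTED_MEASUREMENT`, and `verify_report` are hypothetical names, and real Intel TDX or NVIDIA GPU attestation also requires verifying a vendor-signed quote, which this sketch simply assumes has already happened (the `signature_valid` flag).

```python
import hashlib
import hmac
import secrets
from dataclasses import dataclass

# Hypothetical: hash of the software image the user expects the enclave to run.
EXPECTED_MEASUREMENT = hashlib.sha256(b"audited-model-server-v1").hexdigest()


@dataclass
class AttestationReport:
    """Simplified stand-in for a hardware attestation report."""
    measurement: str       # hash of the code loaded into the enclave
    nonce: str             # challenge value echoed back by the enclave
    signature_valid: bool  # assumed outcome of checking the vendor signature


def verify_report(report: AttestationReport, sent_nonce: str) -> bool:
    """Accept the enclave only if all three checks pass."""
    if not report.signature_valid:
        return False  # report was not signed by genuine hardware
    if not hmac.compare_digest(report.nonce, sent_nonce):
        return False  # stale or replayed report
    # The enclave must be running exactly the code we expect.
    return hmac.compare_digest(report.measurement, EXPECTED_MEASUREMENT)


nonce = secrets.token_hex(16)
good = AttestationReport(EXPECTED_MEASUREMENT, nonce, True)
tampered = AttestationReport(
    hashlib.sha256(b"modified-server").hexdigest(), nonce, True
)

print(verify_report(good, nonce))      # True
print(verify_report(tampered, nonce))  # False
```

The point of the sketch is that attestation only tells you what code is running inside the box. It says nothing about the systems that sit in front of or behind it.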

This is a huge upgrade from traditional cloud AI, where your messages are visible to the model provider, cloud operator, and sometimes internal staff.

So far, this is excellent.

But like any system, the protection is limited to the part it controls, which in this case is the actual AI inference. Everything before and after that step happens outside the secure box.

And that’s where the risks begin.

2. The Privacy Gaps Users Need to Understand

Even with world-class hardware privacy, several factors can expose your chats, not because NEAR is doing something wrong, but because the system as a whole can only protect what it owns.

Here are the major gaps in plain language:

Gap 1: If you sign in with Google or GitHub

Signing in this way ties your “private” chat directly to a real-world identity.

If your Google or GitHub account is ever:

- Hacked

- Phished

- Token-stolen

- Malware-compromised

…then someone could log in as you and access your chat history, metadata, or identity linkages.

The privacy of the TEE does not protect your identity.

Examples:

- Google OAuth Token Theft / EvilProxy Campaign (2023–2024): Reverse-proxy phishing platforms like EvilProxy enabled automated MFA bypass and OAuth token theft, letting attackers log in as victims.

Reference: https://www.resecurity.com/blog/article/evilproxy-phishing-as-a-service-with-mfa-bypass-emerged-in-dark-web

- GitHub OAuth Token Leak Incidents (2022–2024): Multiple third-party CI providers and apps leaked GitHub OAuth tokens, enabling attackers to impersonate users.

Reference: https://github.blog/news-insights/company-news/security-alert-stolen-oauth-user-tokens/

To mitigate this gap, I highly recommend creating and signing in with a NEAR account, where you control the keys and access yourself. If you really want to be safe, you could even create a new non-identifiable NEAR account for every chat session, which limits the damage if your keys are ever compromised. This sign-in option was added recently and, if chosen, eliminates the sign-in risk associated with GitHub/Google.

Gap 2: Your metadata may still be visible

NEAR AI runs both the gateway and the model inside TEEs (which protects the content of your messages), but metadata about your activity still exists outside the enclave. This is unavoidable because it’s generated before your request enters the secure environment.

That means systems outside the TEE can still see things like:

- who you logged in as (your GitHub, Google, or NEAR account)

- when you used the service

- your IP address and general location

- how often you sent requests

- which model you picked

- how big your requests were (not the content, just the size)

This metadata is needed for the service to operate (login, rate limits, billing, etc.), but it also means:

Your messages are private but your presence, identity, and usage patterns likely are not.

So while NEAR AI does an excellent job protecting the contents of your chat, it cannot hide the fact that you used the system in the first place.
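As a rough illustration, the Python sketch below shows how a service could build a complete log entry from an encrypted request without ever decrypting it. Everything here is hypothetical: `GatewayLogEntry`, `log_request`, the account name, the IP address, and the model name are made up for the example and do not describe NEAR AI's actual logging.

```python
import os
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass
class GatewayLogEntry:
    """Hypothetical record a service could keep without reading any content."""
    account_id: str
    timestamp: str
    ip_address: str
    model: str
    request_bytes: int


def log_request(account_id: str, ip_address: str, model: str,
                ciphertext: bytes) -> GatewayLogEntry:
    """Build a log entry from an encrypted request; the body is never decrypted."""
    return GatewayLogEntry(
        account_id=account_id,
        timestamp=datetime.now(timezone.utc).isoformat(),
        ip_address=ip_address,
        model=model,
        request_bytes=len(ciphertext),  # size only, not content
    )


# Random bytes stand in for an encrypted prompt that only the enclave can read.
encrypted_prompt = os.urandom(512)
entry = log_request("alice@example.com", "203.0.113.7", "example-model",
                    encrypted_prompt)
print(asdict(entry))
```

Every field in the record is available outside the enclave, which is why encrypting the content alone does not hide who used the service, when, or how much.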

Example:

- Strava Fitness App Metadata Leak (2018 “Heatmap” Incident): Strava published a global heatmap of user activity (metadata), which unintentionally exposed secret military bases, patrol routes, and soldier movement patterns.

No content leaked (only metadata), yet it exposed extremely sensitive information.

Reference: https://www.wired.com/story/strava-heat-map-military-bases-fitness-trackers-privacy/
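The same effect can be sketched in a few lines of Python. The timestamps below are invented for illustration and `busiest_hours` is a hypothetical helper, but the sketch shows how a usage pattern falls out of request times alone, with no message content involved.

```python
from collections import Counter
from datetime import datetime

# Hypothetical request timestamps (UTC) for one account.
timestamps = [
    "2025-12-01T02:14:00", "2025-12-01T02:40:00", "2025-12-02T02:05:00",
    "2025-12-02T03:10:00", "2025-12-03T02:30:00", "2025-12-03T14:00:00",
]


def busiest_hours(stamps, top=1):
    """Count requests per hour of day and return the most active hours."""
    hours = Counter(datetime.fromisoformat(s).hour for s in stamps)
    return hours.most_common(top)


print(busiest_hours(timestamps))  # [(2, 4)]
```

Four of the six requests land in the 02:00 hour, which already hints at a routine or a time zone.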

Gap 3: TEEs are strong but not invincible

Trusted execution environments have a history of vulnerabilities:

- Side-channel leaks

- GPU memory attacks

- Cache-timing inference

- Power analysis attacks

- Misconfiguration bugs

- Firmware flaws

Intel SGX, AMD SEV, and even NVIDIA’s new GPU TEEs have all had attacks demonstrated against them.

No one - not NEAR, not AWS, not Intel, not NVIDIA - can guarantee absolute protection forever.
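For readers unfamiliar with side channels, the toy Python sketch below shows the basic idea. It is not an attack on Intel TDX or NVIDIA hardware: `naive_equal` is a hypothetical function, and the step counter stands in for the execution time an attacker would measure. The comparison never reveals the secret directly, yet the amount of work it does leaks how many leading bytes of a guess are correct.

```python
import hmac
from typing import Tuple


def naive_equal(secret: bytes, guess: bytes) -> Tuple[bool, int]:
    """Early-exit comparison; returns the result and how many bytes were checked."""
    steps = 0
    if len(secret) != len(guess):
        return False, steps
    for a, b in zip(secret, guess):
        steps += 1
        if a != b:
            return False, steps  # stops at the first mismatch
    return True, steps


secret = b"hunter2!"
print(naive_equal(secret, b"xxxxxxxx"))  # (False, 1)
print(naive_equal(secret, b"hunxxxxx"))  # (False, 4)

# The standard library's constant-time comparison is designed to avoid this leak.
print(hmac.compare_digest(secret, b"hunxxxxx"))  # False
```

Both guesses are wrong, but the second one takes measurably more work, and that difference is the leak. Hardware side channels follow the same logic, with shared caches, power draw, or timing playing the role of the step counter.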

Examples:

- GPU Side-Channel Attack (2023): Researchers demonstrated that GPUs can leak sensitive data through hardware side channels, the same class of weakness that GPU-based confidential computing has to defend against.

Reference: https://thehackernews.com/2023/09/researchers-uncover-new-gpu-side.html

- Intel SGX / AMD SEV TEE Breaks (Foreshadow, Plundervolt, SEVered, etc.):

Multiple attacks successfully extracted secrets or bypassed isolation guarantees in CPU-based TEEs, showing that TEEs have to be patched and hardened as vulnerabilities emerge.

References:

- Foreshadow (Intel SGX): https://foreshadowattack.eu/

- Plundervolt: https://plundervolt.com/

- SEVered (AMD): https://arxiv.org/abs/1805.09604

Gap 4: Law enforcement can demand logs or metadata

Even if NEAR AI never stores your plaintext chats or can't access them, governments can legally compel:

- Identity information

- Access logs

- Metadata

- Billing records

- Authentication events

The U.S. CLOUD Act applies to cloud providers subject to U.S. jurisdiction, including ones claiming strong privacy.

Example:

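- U.S. CLOUD Act Compelled Access (2018–Present): The CLOUD Act allows U.S. authorities to compel providers to turn over data in their possession, custody, or control, including logs and metadata, even if it is stored overseas. Encryption does not exempt a provider, although it can only hand over what it actually holds.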

Reference: https://www.congress.gov/bill/115th-congress/house-bill/4943

Gap 5: Operational mistakes are always possible

History shows that many of the world’s biggest data leaks weren’t caused by sophisticated attacks - just mistakes:

- Misconfigured buckets

- Logging enabled by default

- Debug data accidentally left on

- Cross-tenant caching bugs

- Broken access controls

Even perfectly encrypted inference cannot protect against human error in surrounding systems.

3. So… What Level of Privacy Are You Actually Getting?

The practical answer:

You are protected from:

- Cloud providers reading your data

- Model providers training on your prompts

- Insider access to plaintext content

- Traffic inspection or snooping during inference

- The AI model leaking your chat to third parties

You are NOT protected from:

- Identity exposure tied to Google/GitHub (if you sign in with Google/GitHub)

- Metadata collection

- Legal demands for logs

- Account compromise

- Hardware vulnerabilities (even if none are known now, they may emerge in future)

- Misconfiguration or operational bugs

In short:

NEAR AI private chat protects your content from the outside world, but it cannot protect your identity, metadata, or usage patterns.

A note on identity: you can significantly mitigate that exposure by using a non-attributable NEAR account.

That’s still a big improvement, but it isn’t absolute privacy.

4. When You Should & Shouldn’t Trust Private Chat

Potential good use cases

- Brainstorming

- Creative writing

- Code review

- Non-sensitive business work

- Research

- Anything non-personal

Caution advised for

- Medical details

- Legal issues

- Financial problems

- Government or security matters

- Whistleblowing

- Anything that could compromise you if linked to your identity

You probably shouldn’t assume private chat makes it completely safe to discuss anything/everything with the model.

The Vital Point

At Vital Point AI, I fully support NEAR’s push toward verifiable, user-owned AI but also believe users deserve total transparency about risks and limits.

Principles:

- Privacy must be observable, not just promised

- Metadata is still data

- Identity linkage is still exposure

- Secure enclaves reduce risk but cannot eliminate it

- Law-enforcement access can be legally compelled (what gets handed over depends on what is actually available)

While NEAR AI's private inference is not a perfect solution for the most sensitive categories of information, it does meaningfully reduce risk compared to using traditional cloud AI systems like ChatGPT, Gemini, Claude, or Copilot for the same tasks. If a user is already considering putting personal, medical, political, or otherwise sensitive data into a mainstream AI chatbot, choosing NEAR AI instead provides a substantial privacy upgrade. Your content is processed inside a hardware-secured enclave that neither NEAR, the model provider, nor the cloud operator can access. No training occurs on your data and no backend systems can view your plaintext.

This doesn’t make high-risk use cases “safe,” but it does make them significantly safer than using any conventional AI chatbot. Private inference cannot protect users from identity linkage, metadata exposure, or the consequences of sharing outputs themselves, but it does eliminate the biggest exposure vector in consumer AI today: provider-side visibility into your content. Put simply, for anyone who would otherwise trust a standard cloud AI with sensitive details, NEAR AI’s private chat offers a far stronger privacy posture and a much smaller attack surface.

AI privacy is NEAR.